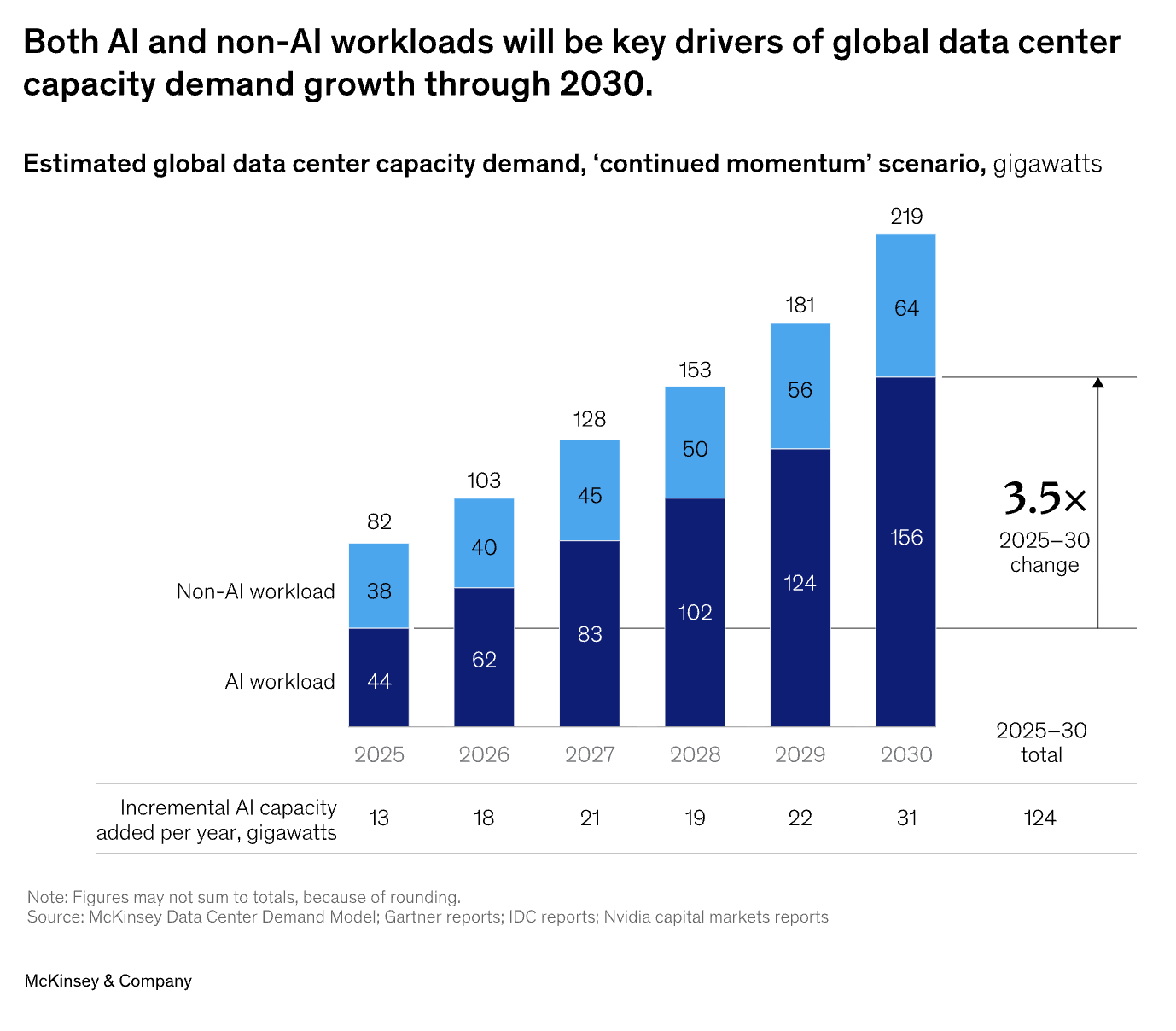

Building AI infrastructure is getting expensive. Really expensive. McKinsey's latest analysis projects that data centers worldwide will require around $6.7 trillion in investment by 2030 to keep pace with demand for compute, most of it driven by AI. That's a staggering number, and it's created a race that only the biggest tech companies can realistically afford. But there's another way. Companies like Aethir are building decentralized GPU networks that let enterprises tap into shared computing power without having to build and own their own data centers. It's a fundamentally different approach, and it might be the only sustainable one for most businesses.

The Problem With Traditional Data Centers

Source: McKinsey & Company

Here's the thing about AI: it needs GPUs. Lots of them. These processors were originally built for graphics, but they're perfect for training AI models and running inference at scale. That's why everyone suddenly needs access to high-end GPUs like NVIDIA's H100s, and why data centers have become the new gold rush.

But relying on the big tech cloud providers like AWS, Google Cloud, and Microsoft Azure comes with real headaches.

- First, there's the cost. GPU rental prices are already steep and climbing.

- Second, there are long waits. If you want access to the latest hardware, you might be waiting 18-24 months just to get your hands on it.

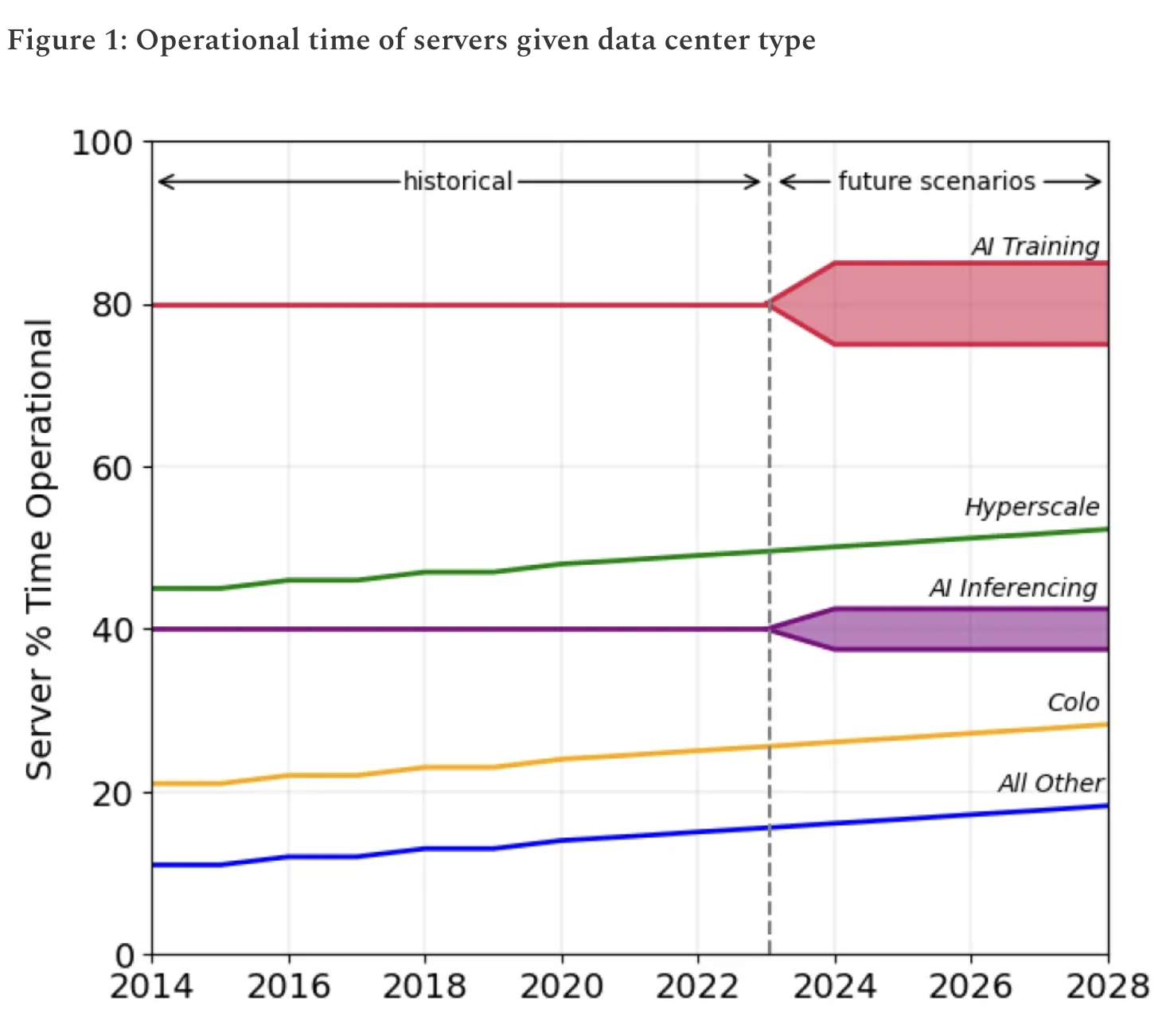

- Third, and this is the kicker: most of these GPUs sit idle. Research shows that GPU utilization in traditional data centers hovers between 30% and 50%, which means that at any given time more than half of all GPUs are doing nothing. Companies are paying for hardware that's not even being used, and that's a massive waste of money.

Add in vendor lock-in and surprise data egress fees, and you've got a system that's broken for anyone who isn't a hyperscaler.
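To put the idle-hardware point in numbers, here is a minimal sketch of what a GPU really costs per hour of useful work at a given utilization. The hourly rate is a hypothetical placeholder, not a quoted price from any provider, and the function name is ours.

```python
def effective_cost_per_useful_hour(hourly_rate: float, utilization: float) -> float:
    """Cost of one hour of actual GPU work when the GPU is busy only
    `utilization` (a fraction in (0, 1]) of the time it is paid for."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in the range (0, 1]")
    return hourly_rate / utilization


if __name__ == "__main__":
    hypothetical_rate = 4.00  # assumed $/GPU-hour, for illustration only
    for utilization in (0.3, 0.5, 1.0):
        cost = effective_cost_per_useful_hour(hypothetical_rate, utilization)
        print(f"utilization {utilization:.0%}: ${cost:.2f} per useful GPU-hour")
```

At 50% utilization, every useful hour costs twice the listed rate. At 30%, it costs more than three times as much.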

The infrastructure problems go deeper than just cost. Deloitte surveyed 120 power and data center executives in 2025, and the results paint a grim picture. Grid stress is the top challenge: 79% of respondents said AI will increase power demand through 2035. Supply chain issues are holding up projects (65% cited this as a problem), and security concerns are real (64%). In some places, the wait to connect a new data center to the grid is seven years.

That's not a bottleneck, that's a wall.

A Different Approach: Aethir’s Decentralized GPU Cloud Computing

Source: Power and Policy

What if you didn't have to build a data center at all? That's the idea behind decentralized GPU-as-a-Service cloud networks. Instead of relying on one company's infrastructure, these networks tap into idle GPUs from data centers, mining operations, and other sources around the world. It's like Airbnb, but for computing power.

The benefits are pretty straightforward. You can access GPUs at a fraction of what the big cloud providers charge, sometimes 60-80% cheaper. You can scale up or down as needed without massive upfront investments. And smaller companies finally get access to enterprise-grade hardware, which levels the playing field for AI innovation.

Grayscale Research has analyzed decentralized physical infrastructure networks in depth and identified their key advantages: no middleman taking a cut, better resilience (no single point of failure), lower overhead costs, and aligned incentives for everyone involved. a16z crypto's research on the topic points out that decentralized networks can actually democratize AI development. Right now, only OpenAI, Microsoft, and a handful of others can afford to train large models. Decentralized compute changes that equation.

How Aethir Is Building This

Aethir isn't just talking about decentralized GPU computing; it's actually doing it. The platform connects companies that need GPU power with "Cloud Hosts" who have hardware to share. The result is a network of over 435,000 GPU containers that can handle serious workloads.

For companies using Aethir, the pitch is simple: get access to top-tier GPUs (including H100s) without buying or building anything.

- No capital expenditure on data centers.

- No vendor lock-in.

- Global infrastructure across 93+ countries means low latency wherever you are.

- Transparent pricing with no hidden egress fees, at rates 86% cheaper than Google Cloud.

For the people providing the hardware, the Cloud Hosts, there's a real incentive to participate. They can monetize idle GPUs and earn revenue from the network in ATH tokens. Unlike traditional models where hardware sits unused, Cloud Hosts get paid for contributing their resources to the Aethir ecosystem.

Why This Matters Now

The traditional data center arms race is unsustainable. It's a game only a few companies can win. For everyone else (startups, researchers, and smaller enterprises), the future has to look different.

Aethir is proving that you don't need to own infrastructure to have access to world-class computing power. You don't need to wait years for grid connections or deal with supply chain nightmares. You just plug into a global network.

As AI continues to grow and demand for compute keeps climbing, decentralized networks like Aethir's won't be a nice-to-have; they'll be essential. Aethir is making AI infrastructure accessible, affordable, and sustainable. That's not just good business. That's the future.

Explore Aethir’s enterprise AI GPU-as-a-Service infrastructure offering here.

Apply to become an Aethir Cloud Host and start monetizing your idle GPUs by filling out this form.
