Key Takeaways

- AI workflow platforms transform fragile AI model demos into reliable, repeatable production-scale AI workflows.

- Scalable AI content production pipelines require generative AI infrastructure built for sustained AI inference at scale, not one-off outputs.

- The true bottleneck for AI workflow scalability is access to elastic GPU compute for generative AI, not model capability.

- Aethir’s decentralized GPU cloud enables scalable AI inference by removing centralized cloud constraints on throughput, latency, and cost.

The AI Demo Issue: Why Impressive Models Don’t Equal Scalable Products

Visually impressive AI content demos are everywhere, showing how a single prompt can yield stunning face-swap videos, melting pizzas falling on people’s faces, or static images turned into extravagant party scenes. Such demos are great for personal social media accounts, viral content, and early adoption, but they quickly break down in real content pipelines. That’s because AI model demos aren’t production-scale AI workflows: they are hard to repeat and hard to scale. Each new iteration consumes additional GPU compute, and without standardized workflow settings, creators have to iterate again and again to reach a usable result.

This drives up GPU compute costs for generative AI, especially for commercial creators such as influencers, marketing agencies, and creative studios that need polished, production-ready AI images and videos. Demos are essentially one-off inference runs with low concurrency and no guarantees of latency, availability, or cost stability for future outputs.

That’s where standardized production-scale AI workflows offer a viable alternative for commercial creators. With AI workflow platforms, creators can optimize AI image and video production for reliability and predictability, allowing them to integrate generative AI infrastructure into their content pipelines.

Let’s explore how production-ready AI image and video workflows support content creators, and learn why Aethir’s decentralized GPU cloud is positioned as a key infrastructure support pillar for AI workflow platforms.

What Production Actually Means for AI Content Creation

The production process for AI content creation is defined by repeatability, predictability, and throughput. If an output isn’t repeatable and predictable, or can’t be produced at scale, it’s an AI model demo, not a production-ready AI image or video.

In fast-paced environments such as marketing agencies or influencer-run social media channels, production-ready AI content is essential for integrating AI into existing content pipelines. If an AI image or video needs numerous iterations and heavy customization, and incurs high costs, before it becomes a high-quality end product, it isn’t production-ready.

Experimenting with AI model demos works well when there are no deadlines. In fast-paced commercial settings, however, such as a marketing agency that needs a series of ready-to-use AI product videos, demos aren’t a viable solution. The videos must be consistent and repeatable at scale, especially for long-term marketing campaigns that depend on a consistent brand identity and quality.

The key real-world requirements AI model demos don’t address include:

- Hundreds or thousands of concurrent users

- Batch generation and iteration loops

- Consistent output quality (characters, styles, motion)

- Time-sensitive workloads (campaigns, launches, trends)

This is something one-prompt raw AI tools can’t offer. Each time you run a one-prompt tool, there’s a high chance the result will differ slightly in format, quality, or style. Professional content creators can’t rely on such tools for AI content production pipelines.
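To make the repeatability point concrete, here is a minimal Python sketch. `fake_generate` is a hypothetical stand-in for a generative model call, not a real model API: it derives its random state from the prompt and an optional seed. Pinning the seed, as a workflow platform’s control layer might, makes the same prompt reproduce the same output, while an unseeded call drifts from run to run. Real GPU inference can still vary slightly with a fixed seed unless deterministic kernels are enabled, so treat this as an illustration of the principle only.

```python
import hashlib
import random
from typing import List, Optional


def fake_generate(prompt: str, seed: Optional[int] = None) -> List[float]:
    """Hypothetical stand-in for a generative model call.

    Returns a tiny "output" vector whose values depend on the prompt and
    the random state, mimicking how sampling works in image/video models.
    """
    if seed is None:
        # Unseeded: the generator is initialized from OS entropy,
        # so every call produces a different result.
        rng = random.Random()
    else:
        # Seeded: hash (prompt, seed) into a fixed initial state, so the
        # same pair always reproduces the same output.
        digest = hashlib.sha256(f"{prompt}|{seed}".encode("utf-8")).hexdigest()
        rng = random.Random(int(digest, 16))
    return [round(rng.random(), 4) for _ in range(4)]


if __name__ == "__main__":
    prompt = "red sneaker on a white studio background"
    print(fake_generate(prompt, seed=42) == fake_generate(prompt, seed=42))  # True
    print(fake_generate(prompt) == fake_generate(prompt))  # almost surely False
```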

How AI Workflow Platforms Solve What Demos Can’t

Raw AI tools produce output from a one-off prompt. AI workflow platforms instead chain tightly connected AI tools and optimize that chain to deliver a specific result. Because a raw tool’s output varies from run to run, it can’t be tuned to reproduce the same result consistently; an AI workflow platform can, which makes it a viable route to reproducible, production-ready AI images and videos.

This enables creators to scale their content production with AI automation, knowing that the output will be consistent. Imagine creating hundreds of visually consistent brand images and short videos with a single AI workflow platform, instead of using a bunch of different tools that require extensive design expertise or even coding skills.
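One way a platform could keep hundreds of assets consistent is to expand a single prompt template and a shared style preset into a batch of job specifications. The sketch below uses hypothetical `StylePreset`, `Job`, and `expand_template` names; it is an illustration under that assumption, not any specific platform’s API.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class StylePreset:
    """Hypothetical brand preset: every job that uses it shares these settings."""
    name: str
    style_prompt: str
    seed: int
    width: int = 1024
    height: int = 1024


@dataclass(frozen=True)
class Job:
    """One generation request derived from a template and a preset."""
    prompt: str
    seed: int
    width: int
    height: int


def expand_template(template: str, products: List[str], preset: StylePreset) -> List[Job]:
    """Turn one prompt template plus a product list into a batch of jobs.

    Only the product varies; style text, seed, and resolution come from
    the shared preset, which is what keeps the batch visually consistent.
    """
    jobs = []
    for product in products:
        prompt = template.format(product=product) + ", " + preset.style_prompt
        jobs.append(Job(prompt=prompt, seed=preset.seed,
                        width=preset.width, height=preset.height))
    return jobs


if __name__ == "__main__":
    spring = StylePreset(name="spring-campaign", style_prompt="pastel studio lighting", seed=7)
    for job in expand_template("product shot of {product}", ["mug", "tote bag", "cap"], spring):
        print(job)
```

Reusing one seed across different prompts is just one simple convention assumed here; a platform might instead store a seed per product or per scene.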

AI workflow platforms make this possible today, running on high-performance GPUs to serve thousands of users worldwide.

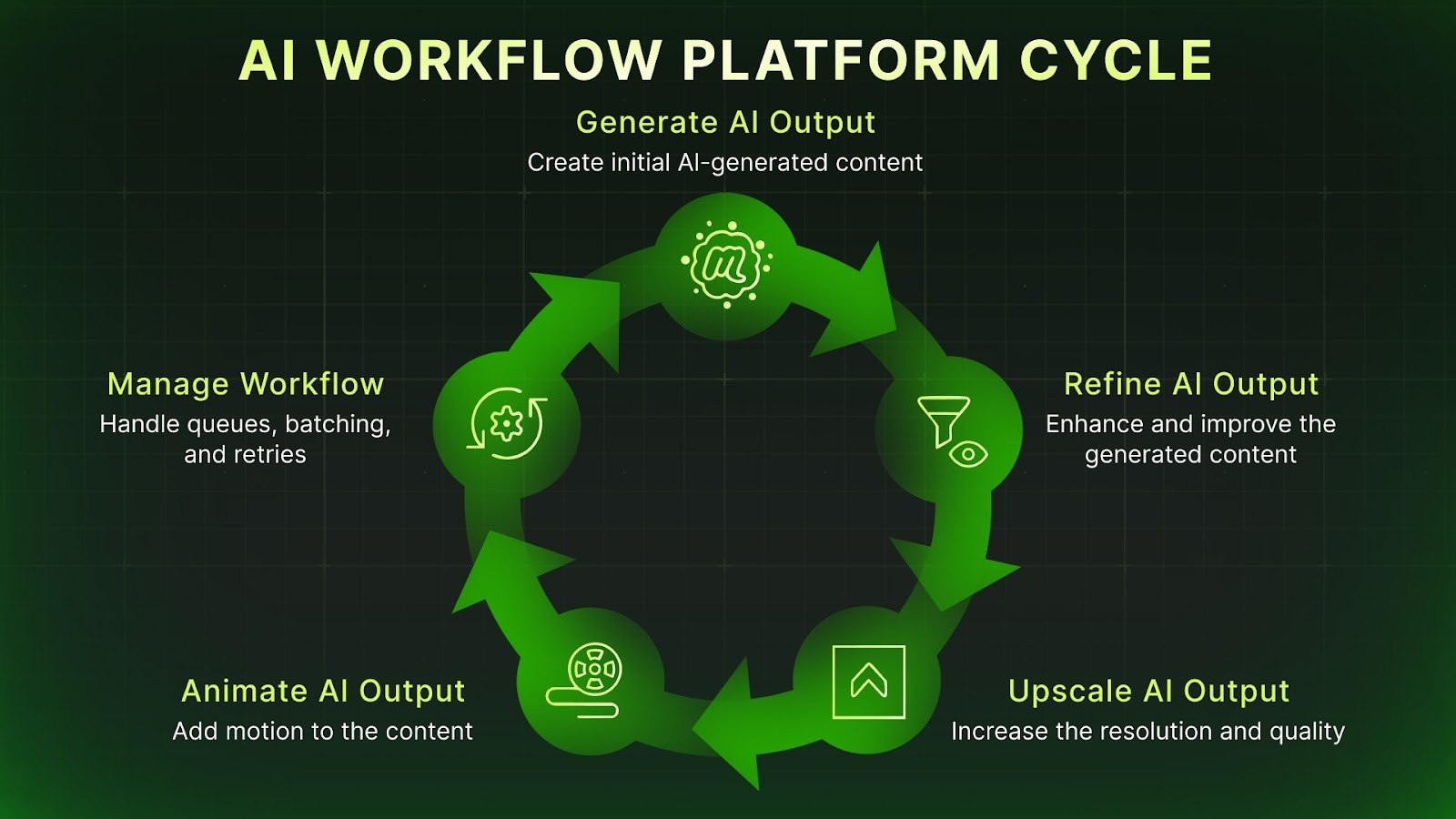

An AI workflow platform wraps raw AI models in production logic, offering:

- Multi-step pipelines (generate, refine, upscale, animate)

- Control layers (templates, presets, seeds, masks, motion)

- Queues, batching, retries, and concurrency management
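The production logic listed above can be sketched in a few dozen lines of Python. This is a minimal illustration under stated assumptions, not how any particular platform is built: the `generate`, `refine`, and `upscale` steps are hypothetical placeholders for model calls, a `RuntimeError` stands in for a transient GPU failure, and a thread pool’s worker limit plays the role of a concurrency cap on GPU slots.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

Job = Dict[str, object]
Step = Callable[[Job], Job]


# Hypothetical pipeline steps; a real platform would call models here.
def generate(job: Job) -> Job:
    return {**job, "stage": "generated"}


def refine(job: Job) -> Job:
    return {**job, "stage": "refined"}


def upscale(job: Job) -> Job:
    return {**job, "stage": "upscaled", "width": int(job["width"]) * 2}


def run_with_retries(step: Step, job: Job, max_attempts: int = 3,
                     backoff_s: float = 0.1) -> Job:
    """Run one step, retrying transient failures with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return step(job)
        except RuntimeError:
            if attempt == max_attempts:
                raise  # out of retries: surface the error to the caller
            time.sleep(backoff_s * 2 ** (attempt - 1))
    raise ValueError("max_attempts must be at least 1")


def run_pipeline(steps: List[Step], job: Job) -> Job:
    """Pass one job through each step in order (e.g. generate -> refine -> upscale)."""
    for step in steps:
        job = run_with_retries(step, job)
    return job


def run_batch(steps: List[Step], jobs: List[Job], max_concurrency: int = 4) -> List[Job]:
    """Process a batch with at most `max_concurrency` jobs in flight.

    Results come back in input order because Executor.map preserves order.
    """
    with ThreadPoolExecutor(max_workers=max_concurrency) as pool:
        return list(pool.map(lambda job: run_pipeline(steps, job), jobs))


if __name__ == "__main__":
    batch = [{"id": i, "width": 512} for i in range(5)]
    for result in run_batch([generate, refine, upscale], batch, max_concurrency=2):
        print(result)
```

Retrying only the failed step, rather than restarting the whole job, avoids paying again for GPU work that already succeeded.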

By adopting AI workflows instead of disconnected raw AI tools that produce demos, creators gain:

- Faster iteration

- Better consistency

- Lower marginal cost per asset
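The cost benefit can be made explicit with a simple expected-value calculation. Assume, purely for illustration, that each generation attempt occupies a GPU for a fixed time at a fixed hourly price, and that each attempt is independently usable with some probability. The expected number of attempts per usable asset is then 1 divided by that acceptance rate, so anything that raises the acceptance rate, such as a tuned workflow, lowers the marginal cost per asset. The helper name and inputs are hypothetical.

```python
def cost_per_usable_asset(gpu_hourly_usd: float, seconds_per_attempt: float,
                          acceptance_rate: float) -> float:
    """Expected GPU cost of one usable asset (hypothetical helper).

    Each attempt costs gpu_hourly_usd * seconds_per_attempt / 3600.
    If each attempt is independently usable with probability
    `acceptance_rate`, the expected number of attempts per usable asset
    is 1 / acceptance_rate (a geometric distribution).
    """
    if not 0 < acceptance_rate <= 1:
        raise ValueError("acceptance_rate must be in (0, 1]")
    cost_per_attempt = gpu_hourly_usd * seconds_per_attempt / 3600
    return cost_per_attempt / acceptance_rate


if __name__ == "__main__":
    # Illustrative, made-up inputs: $2.00 per GPU-hour, 36 s per attempt.
    for rate in (0.2, 0.8):
        cost = cost_per_usable_asset(2.0, 36, rate)
        print(f"acceptance {rate:.0%}: ${cost:.3f} per usable asset")
```

With those made-up inputs, each attempt costs $0.02, so a 20% acceptance rate works out to $0.10 per usable asset versus $0.025 at 80%.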

This way, AI workflow platforms turn AI content creation tools into usable generative AI infrastructure rather than experimental technology.

The Real Scaling Bottleneck: Infrastructure, Not Models

To provide creators with reliable, reusable AI content production pipelines, AI workflow platforms need streamlined access to premium-quality GPU infrastructure. Most AI workflow platforms already have access to a wide range of popular AI models, such as Sora, Kling, Nano Banana, and Veo. The actual scaling bottleneck lies in the availability of GPU infrastructure.

The type, cost, and flexibility of the underlying GPU compute for generative AI workflow platforms determine:

- GPU availability

- Inference latency under load

- Cost predictability

- Scalability based on geographic proximity to users

Currently, GPU compute for generative AI is mainly provided by large-scale, centralized hyperscaler services such as AWS, Google Cloud, and Azure. Centralized cloud providers struggle to deliver cost-efficient compute for AI innovators due to fixed capacity, expensive burst scaling, and usage quotas that result in service throttling during peak hours.
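Why fixed capacity hurts at peak hours can be illustrated with a textbook queueing model. The sketch below uses the M/M/c formula (Poisson arrivals, exponential service times, `c` identical GPUs), which is a deliberate simplification of real inference traffic and not how any cloud provider measures latency. It shows two effects: mean latency climbs steeply as utilization approaches 100%, and adding GPUs at the same arrival rate brings it back down.

```python
import math


def mmc_mean_latency(arrival_rate: float, service_rate: float, servers: int) -> float:
    """Mean time in system (queue wait + service) for an M/M/c queue.

    arrival_rate: jobs arriving per second (lambda)
    service_rate: jobs one GPU completes per second (mu)
    servers:      number of identical GPUs (c)
    Returns math.inf when the system is overloaded (lambda >= c * mu).
    """
    c = servers
    if arrival_rate >= c * service_rate:
        return math.inf  # the queue grows without bound
    a = arrival_rate / service_rate  # offered load in Erlangs
    rho = a / c  # per-GPU utilization
    # Erlang C: probability that an arriving job has to wait.
    top = a ** c / math.factorial(c) / (1 - rho)
    bottom = sum(a ** k / math.factorial(k) for k in range(c)) + top
    p_wait = top / bottom
    return p_wait / (c * service_rate - arrival_rate) + 1 / service_rate


if __name__ == "__main__":
    for gpus in (1, 2, 3):
        print(gpus, "GPU(s):", round(mmc_mean_latency(0.9, 1.0, gpus), 3), "s")
```

For example, at 0.9 jobs per second against GPUs that each finish 1 job per second, the model predicts a mean latency of 10 seconds on one GPU and about 1.25 seconds on two.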

For AI workflow platforms, this results in expensive, inefficient services and rigid contracts that carry vendor lock-in risks.

Slow compute queues mean longer waits and fewer iterations before a deadline, which lowers output quality and ultimately limits scalability for AI workflow platforms that depend on centralized hyperscaler clouds.

Because the models required for production-ready AI images and videos already exist, production scale is an infrastructure problem for AI workflow platforms, not a limitation of AI technology.

How Aethir’s Decentralized GPU Cloud Supports Production-Grade AI Workflows

AI workflow platforms that offer production-ready image and video content creation are becoming high-value tools for content creators. Still, they need scalable AI inference to support the industry’s growing appetite for AI content.

Aethir’s decentralized GPU cloud takes a different approach to GPU compute for generative AI than centralized hyperscalers. Our network spans 200+ locations across 94 countries and comprises nearly 440,000 high-performance GPU Containers. Aethir’s fleet includes thousands of high-end GPUs for scalable AI inference and other advanced AI tasks, including NVIDIA H100s, H200s, and B200s.

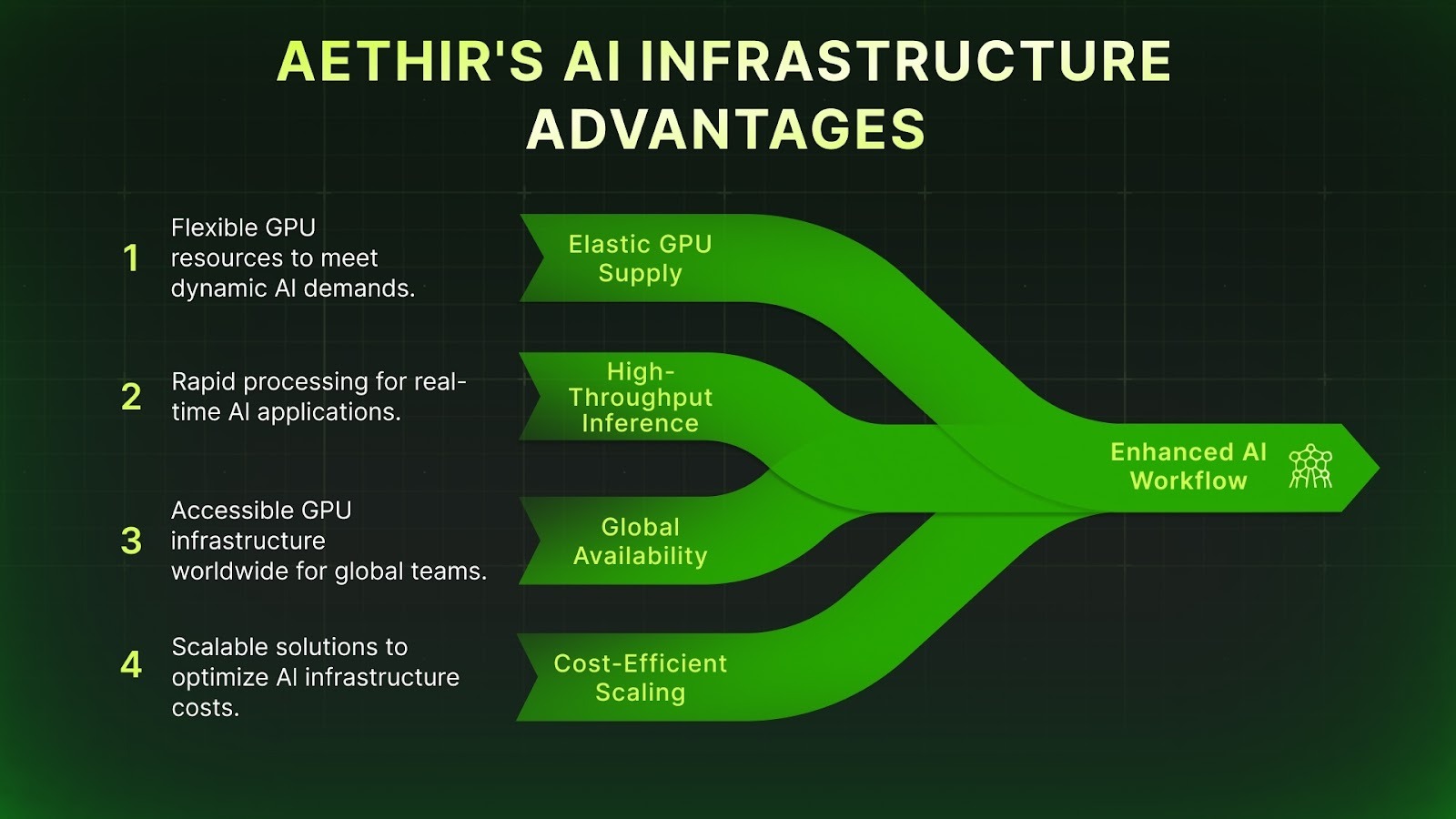

Aethir provides essential GPU infrastructure advantages for AI workflow platforms:

- Elastic GPU supply

- High-throughput inference

- Global availability

- Cost-efficient scaling
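Elastic supply matters because demand is uneven. A rough capacity estimate, assuming a fixed GPU time per job and a target utilization below 100% that keeps queues short, is the offered load (jobs per hour times GPU-seconds per job, divided by 3,600) divided by that target. The `gpus_needed` helper below is hypothetical and ignores startup time, batching gains, and differences between GPU models.

```python
import math


def gpus_needed(jobs_per_hour: float, gpu_seconds_per_job: float,
                target_utilization: float = 0.7) -> int:
    """Rough GPU count for a given demand level, with headroom.

    Offered load = arrival rate * service time = average number of busy GPUs.
    Dividing by a target utilization below 1.0 leaves headroom so that
    queues (and therefore latency) stay short.
    """
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    offered_load = jobs_per_hour * gpu_seconds_per_job / 3600  # busy GPUs on average
    return math.ceil(offered_load / target_utilization)


if __name__ == "__main__":
    # Illustrative, made-up demand levels at 20 GPU-seconds per job.
    print("off-peak:", gpus_needed(720, 20, 0.5))
    print("peak:", gpus_needed(3600, 20, 0.5))
```

With those illustrative numbers, off-peak demand needs 8 GPUs while the peak needs 40. A fixed deployment sized for the peak pays for the difference around the clock; an elastic one can scale between the two.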

Decentralized GPU cloud infrastructure distributes capacity across many locations, eliminating centralized cloud choke points. That makes it burst-ready for peak creator demand, new workflow launches, and user base growth. The global distribution of GPUs also lets Aethir’s decentralized GPU cloud offer lower latency by positioning compute closer to end users.
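Placing GPUs closer to users only helps if jobs are routed there. A minimal latency-aware routing rule, assuming the scheduler knows each region’s round-trip time to the user and its idle GPU count, is to pick the nearest region that has free capacity and to queue the job if none does. `Region`, `pick_region`, and the region names are hypothetical; this is not a description of Aethir’s actual scheduler.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Region:
    """Hypothetical GPU region as seen by a scheduler."""
    name: str
    rtt_ms: float  # measured round-trip time from the user
    free_gpus: int  # currently idle GPUs


def pick_region(regions: List[Region]) -> Optional[Region]:
    """Route to the lowest-latency region that still has idle capacity.

    Returns None when every region is full, so the caller can queue the job.
    """
    available = [r for r in regions if r.free_gpus > 0]
    if not available:
        return None
    return min(available, key=lambda r: r.rtt_ms)


if __name__ == "__main__":
    regions = [
        Region("eu-central", rtt_ms=25.0, free_gpus=0),  # nearest, but full
        Region("eu-east", rtt_ms=40.0, free_gpus=3),
        Region("us-east", rtt_ms=110.0, free_gpus=10),
    ]
    chosen = pick_region(regions)
    print(chosen.name if chosen else "queue the job")
```

A production scheduler would also weigh price, GPU model, and data-residency rules, but the nearest-with-capacity rule captures why a wider geographic footprint lowers latency under load.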

Unlike centralized hyperscalers, Aethir is built to support always-on AI inference workloads, ideal for platforms that need to scale workflows, not just demos.

Learn more about Aethir’s decentralized GPU cloud infrastructure in our dedicated blog section.

Explore Aethir’s GPU compute offering for AI enterprises here.

FAQs

Why aren’t AI model demos suitable for real-world products?

AI model demos showcase possibilities but lack repeatability, concurrency, and cost control, making them unsuitable for production-scale AI workflows and AI inference at scale in real content environments.

How are AI workflow platforms different from one-prompt AI tools?

AI workflow platforms turn isolated AI model demos into structured AI content production pipelines that support batching, consistency, and long-term AI workflow scalability.

What infrastructure is required for scalable AI content creation?

Reliable generative AI infrastructure depends on GPU compute for generative AI that can deliver scalable AI inference without latency spikes or cost instability.

Why is a decentralized GPU cloud better for AI inference at scale?

A decentralized GPU cloud removes centralized infrastructure bottlenecks, enabling global, elastic AI inference at scale for workflow platforms running continuous production workloads.
